Tipping Point

I can't be bothered to do the research, so I'm sure there are plenty of blog posts about this, but it occurs to me that there's no legitimate reason to continue the practice of tipping servers at restaurants.

Some places allow servers to be paid less than minimum wage on the assumption that they'll make above the poverty line via tipping, which is ignorant and unfortunately false sometimes. It's much easier to wrap your head around the idea that servers should be paid the wage they are now, except via their paycheck, and the cost transferred to the customer at a reasonable 15-20%.

Why should this bother anyone? I typically tip 20%, both because I used to work as a bus boy, and recognize the need, but also because it's easier to do the math. As long as what I end up paying is the same, why do I care that the money goes through the check instead of a few disgusting dollar bills on the table? Servers are then guaranteed a certain amount per check, which leads to dependable and predictable income, increasing job satisfaction and reducing turnover.

Ok, you might say it gives them incentive to do a worse job. But honestly, how often have you had terrible service that was a direct result of the server? More often than not, they're as upset as you are if your food is late, or incorrect. And it's not just because of the potential for their tip to be reduced; the whole server-diner relationship becomes somewhat tinged with guilt, the server for the lackluster performance, and the diner for having to tip. And if you feel that you regularly overtip those plundering servers, perhaps you also feel we should extend tipping to other service industries as well, such as paying 20% less at Radio Shack (no, I'm not calling it that), when the service is awful, uneducated and ambivalent, which is always. That's a fair point, but only if it's fairly applied. The way it is now gives the tippER the burden of rating service via cash and the tippEE the burden of relying on the good-heartedness of his or her clientele. I can't tell you how many times someone I've been with has over-emphasized how bad their service was to get out of paying a tip.

Just get rid of the idea already. The only damage it'll do is cause the makers of the 20,000 Tip Calculator iPhone Apps to go belly up.

Live Poultry Fresh Killed

So a while back I was wandering around Boston, and saw this little gem of a sign. I couldn't help but snap a phone-cam shot of it. I thought I'd have a wacky photo to share with the world.

Little did I know that I was hardly the first person to snicker at this particular sign. How unoriginal.

The shop, Mayflower Poultry, apparently does sell chickens that are slaughtered on-site daily. They also sell a variety of merchandise emblazoned with the (ostensibly) famous graphic.

Call me old-fashioned, but I can't help but find the live poultry, fresh killed thong underwear more than a little disturbing.

In Meat-Related News...

Holy crap! There's a catastrophic Slim Jim shortage forecast for the rest of the summer. Now what the hell am I going to snap into?

In not-quite-related news, Oscar Mayer died. Now, I'm a fan of bologna-related humor as much as the next guy, but did the Los Angeles Times' obituary really have to start with "His bologna had a first name. His bologna had a last name. So did he." and end with "No word on whether the funeral procession will be led by the Wienermobile" ?

Too soon, Nintendo!

Jack Black:

Chuck Norris:

The Scream dude:

Harry Potter:

Gotta be Weird Al:

Alvin:

Cleveland from Family Guy (there was also Chris Griffin and Quagmire, but didn't get a snap):

SpongeBob:

I'll be interested to see if they take this one out of the US release:

Update: Here's some more.

How to Tell That Your Novelty Meme is Dead

When the local Hallmark begins selling your hilariously-reviewed Amazon item, it's probably best to move on. And yeah, the little card in front of the shirt is a printout of the review.

Wondering what the heck this is all about? Let me google that for you.

Send to Pseudonym, c/o Weaselsnake, π weaselsnake towers

This entire page is dedicated to answering the question I have re: the following video:

Prototype trials December 2008 from Chariot Skates on Vimeo.

If you want me to answer that question definitively, I'd be willing to accept reviewer's copies.

Arm Wrestling

With programs like the infamous "Better on Windows" (link withheld) initiative at Asus, coupled with the genuine desire of the manufacturers of small, low-power netbooks to provide an alternative TO Windows, as they're almost entirely focused on providing cheap but good products, what are you to do with the 500-lb Gorilla on your back? If you're Dell, then you have enough clout and power to offer some - SOME - machines that run Linux, but what about Acer, MSI, and Pixel?

Well... how about getting those manufacturers to install CPUs in their machines on which Windows won't run?

The only defense Microsoft has to something like this is to make Windows run on an ARM processor, rather than an x86 processor. This isn't very likely, since Windows doesn't do a great job of supporting even the variances in computer hardware WITH x86 standardization, not to mention the fact that even if Windows itself ran on ARM processors, it wouldn't be much good to anyone using, say, Office or Photoshop. Any software that runs on an ARM Windows would need to be ported to ARM code. Not impossible, but expensive and as companies like Adobe have shown, not fruitful enough to bother.

The other defense is the typical Microsoft offense, which is to lean on these companies financially until they relent and offer Windows, ideally exclusively, which'd mean a return to more expensive x86 CPUs. These companies might not have anything at all to lose from an angry Microsoft so it'd be interesting to see how that shakes out.

With Linux (and specifically Android), you already have an OS that'll run on ARM hardware, and the vast majority of software that people use (though it may suck compared to its commercial counterparts, yes) is open-source and so therefore much more likely to be recompiled to run on a new processor.

I think this is brilliant, and I for one will buy a netbook with a sunlight-viewable screen, ARM processor and Android as soon as my Acer Aspire One goes the way of my DS.

"Fax this to Hannibal at once!"

But how would you do?

If you've received your high school diploma, you may have a fundamental knowledge of physics, mathematics, biology and the like, but how practical is any of that? Sure, you know that electricity exists and how it exists; you may have been tops in your class at grasping the concept of heliotropism or valence electrons; when it comes right down to it, what good is that going to be?

Let's say you wanted to make a clock. I'm not talking about Big Ben or the NIST Atomic Clock, or even anything as accurate as the Tony the Tiger watch you're wearing right now, proudly displaying your ability to send in 3 UPCs and $5.99 S&H. Let's say you wanted to make a rudimentary timepiece that lost less than - let's say - 30 minutes a day. Could you do it?

I couldn't.

I know how the first clocks work, I understand the mechanics and concepts well enough to potentially design one that, with testing, could be as accurate as my challenge. But I'm missing several crucial skills. The first is an ability to metalwork. The second is the technology to produce the specific metals themselves. It seems to me that a lot of this fancy is built on a premise that you'd have the tools to make what you'd like, and the tools to make them, etc. etc.

It's like a game of Civilization. Even if you had the schematics for the Space Shuttle in 1509, you couldn't build one, even after a lifetime's work. Churches and castles took dozens of men several decades to finish, and let's face it, beautiful as they are, they're not particularly sophisticated technology-wise. I was building fortresses with Lego when I was 8.

I'm not bragging here. I happen to know how clocks and many other pieces of technology work. And there are quite a few I don't understand, even on a fundamental level. The computer you're reading this on now, for example. You know that it houses a CPU and RAM. Once this post has entered your computer, what happens to it? How exactly does a CPU work? Many of us have never even seen one. When confronted with a modern microprocessor with millions of transistors (how do THEY work?), clocks are child's play.

Literally.

My introduction to mechanical engineering and the understanding of how machines and other pieces of technology work came in the form of a Channel 4 television series called The Secret Life of Machines by Tim Hunkin and Rex Garrod. These two eccentric engineers (and though I love both, awful television hosts) went through the inner workings of all sorts of common machines, such as the television and elevator. They used unsophisticated animated drawings and large-scale demonstrations of each individual piece of the main device to show you how the things basically worked. In a half hour, one could learn about the electron gun that powers your TV (though obviously leaving off the superfluous bits like the aerial tuner and audio amplifier) well enough to explain to someone else.

I loved these shows and would watch them constantly, as often as they were on, despite there being a grand total of 18 of them. Tim and in particular Rex were hilarious in their enthusiasm for the experiments they were conducting, often prompting surprised smiles and suppressed giggles from either of them when the experiment worked (or didn't). Tim was the main presenter, but Rex was the one who let you connect to it all. Every other episode or so would have a brief monologue from the bearded northerner, explaining how the concept behind that week's machine had helped him in a project or installation of some crazy contraption in the past. These non sequiturs could often be the highlight of the show, with the awkward man trying to explain how a washing machine motor and linkage fit into his animatronic suit of armor or whatnot.

Tim is also a prolific cartoonist, writing a comic strip called "The Rudiments of Wisdom", which had the same basic premise as the show, except it was obviously much more condensed and unavailable to a growing boy in the USA. His cartoons make appearances in the show both for instructional reasons and also for humor value. They are very very funny at times, especially for fans of very subdued British humo(u)r, but one would be excused for thinking that they are... a bit amateurish.

But you don't have to take my word for it: The entire series is available on the internet, courtesy of The Exploratorium. Do yourself a favor and watch. Who knows? Knowing how a telephone works might save your life, or the one about copy machines might land you a job some day. If nothing else, the reggae version of "Take Five" that opens the show is worthy of a listen.

Architectural Madness

I recently visited the Stata Center at MIT in Cambridge, and took these photos. The building resembles a crazily-assembled hodgepodge of textures and geometric shapes: it looks altogether unlike any other building anywhere, as though it were a human building designed by Martians with only a general description of what our buildings looked like.

The effect for a casual observer is breathtaking: the building is a bizarre and visually satisfying amalgam (perhaps mishmash is a better term) of colors and textures, weirdly canted angles and shapes that appear jammed together at random.

The building was designed by Frank Gehry, who also designed other wacky architectural masterpieces-slash-modernist-nightmares, including Disney Hall in Los Angeles, and the Experience Music Project in Seattle.

Unfortunately, it appears that the functionality of the building is far less impressive than its visual appeal. There is no understandable layout to the building, and (to an unfamiliar observer, of course), it appears ridiculously easy to get lost in.

Floor-to-ceiling glass walls in some offices mean that privacy is impossible. The otherworldly angles, while striking, make hanging bookshelves well-nigh impossible.

The Wikipedia article on the Stata Center lists other defects, including a lack of soundproofing, a tendency to induce vertigo in its inhabitants, and a giant-sized price tag.

Oh, and it's probably not a good sign that MIT is suing Gehry, for massive design flaws leading to cracked masonry, flooding, and mold.

The Third Rail

I saw this sign while I was out and about. While I'm generally a fan of obtuse signage, this one seemed a bit odd. Now I know what a third rail is, but for someone not acquainted with subway electrical terminology, this sign lacks the proper amount of actual warning to be useful. And of course it misses the opportunity to use a hilarious illustration of a stick figure being electrocuted.

This is all prelude (as ever) to a reference to a bizarrely comprehensive wikipedia page: Third Rail. Seriously, is there that much to write about direct current? Ah, who am I kidding? I love it.

Why I Love Wikipedia

Wikipedia can be a controversial topic: message-board flamewars pop up with some regularity, with proponents and critics raging about the relative merit of experts versus amateur editors. In an academic setting, Wikipedia is a thorn in the side of many professors who've learned to dread research papers from overly-credulous undergrads who cite Wikipedia as an authoritative source.

However, for a random-fact addict like myself, Wikipedia is a gift from the heavens. Let's take a tour through one of my most recent knowledge browsing expeditions: I started out with a link to Centralia, a town in Pennsylvania, now virtually abandoned due to the vein of coal that is currently on fire, which has been smoldering for more than three decades.

That took me to the article on Mine Fires, which, I was shocked to find, are actually relatively common, and can burn for not just decades, but centuries. One mine fire in China has apparently been burning since the 1600s, and China at the moment has hundreds of coal fires running uncontrolled, consuming tens of millions of tons of coal annually (how's about that for greenhouse gas emissions?).

From there, it was a short hop to the article on Peat, which can also burn. Apparently Peat (decayed vegetation matter) covers about 2 percent of the global landmass, and is still used as home heating fuel in parts of Ireland and Finland. The related links sent me to Acid sulfate soil, a form of waterlogged soil that, when exposed to air, creates naturally-occurring sulfuric acid, which can acidify water, killing vegetation and fish, and even undermining the structure of concrete and steel buildings.

Well, I couldn't not investigate what Wikipedia had to say about Waterlogging. I found out that waterlogging can preserve otherwise-perishable artifacts that can tell archeologists much about ancient cultures. Well, on to Archeology! Lots to read (skim) there, but the section on Pseudoarcheology caught my eye. Apparently fictional archeologists don't follow established practice very well (take that, Indiana Jones!).

But it was the link to the article on Xenoarchaeology that really caught my attention. Apparently this is a still-hypothetical form of the field, which will (or may) study the physical remnants of extraterrestrial cultures. The actual field is currently a haven for fringe theorists and pseudoscientists, but the links to xenoarchaeology in science fiction will definitely merit a return visit.

The related topic of The Mediocrity Principle was a fascinating one: initially put forth by Copernicus, the theory states that there is nothing particularly special or unique about Earth, as compared to the rest of the universe. One of the logical conclusions of such a principle was the Drake Equation, which hypothesized the likelihood of an extraterrestrial civilization developing in our galaxy (and the likelihood we would come into contact with it), based on: how many stars are formed, how many have planets, how many of those can support life, how many of those actually do, and the subset who develop intelligent life, and so on.
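For the curious, the chain of "how many of those" factors above is just a product of terms. Here's a minimal Python sketch of that arithmetic, assuming the commonly cited seven-factor form of the equation (N = R* x fp x ne x fl x fi x fc x L). The function name, the parameter names and the sample inputs are all mine, chosen purely for illustration; nobody actually knows the real values of most of these.

```python
def drake_estimate(star_formation_rate, frac_with_planets, habitable_per_system,
                   frac_life, frac_intelligent, frac_communicating,
                   lifetime_years):
    """Multiply the Drake factors: N = R* * fp * ne * fl * fi * fc * L.

    star_formation_rate  -- new stars formed per year in the galaxy (R*)
    frac_with_planets    -- fraction of those stars with planets (fp)
    habitable_per_system -- planets per such system that could support life (ne)
    frac_life            -- fraction of those where life actually appears (fl)
    frac_intelligent     -- fraction of those that develop intelligence (fi)
    frac_communicating   -- fraction of those we could detect (fc)
    lifetime_years       -- how long such a civilization stays detectable (L)
    """
    return (star_formation_rate * frac_with_planets * habitable_per_system
            * frac_life * frac_intelligent * frac_communicating
            * lifetime_years)


# Placeholder inputs, picked only to show the multiplication.
# They are NOT measured or endorsed values.
guess = drake_estimate(1.0, 0.5, 2.0, 0.5, 0.1, 0.1, 10_000)
print(f"Estimated detectable civilizations: {guess:.1f}")
```

Change any one factor by a power of ten and the answer moves by the same power of ten, which is why published estimates are all over the map.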

So, in the space of a little reading and clicking, I went from an abandoned town that's been on fire for decades, to the numeric possibility of alien civilizations. That's why I love Wikipedia.

I'm a Little Bit Audio, He's A Little Bit Snake - Audiosnake 01

Thrill as the varying degrees of audio quality invade your brain to deliver untold levels of geekiness! Gasp in horror at the in-jokes and unexplained references! Marvel at the mediocre production levels! Yawn as you realize that there's very little to care about!

If you're curious to hear what we sound like, this is the show for you. Feel free to leave us feedback, and we'll feel free to ignore it or read it on the next show.

Enjoy!

Who thought this made sense?

Seen recently while I was out. Seriously, did someone put one (or both) of these signs up together and think "Yeah, this makes sense. Nobody will have any problem with this"? At least they didn't use quotation marks for "emphasis." Then my head might have exploded.

Even without the written hints, I could guess this was a governmental building.

Inventors, Part 3: Mold Saves the World Again

Welcome to the last day of my three-day retrospective on the most important inventors of the twentieth century. If you haven't already, check out the previous posts, where I expounded on agronomist Norman Borlaug and chemist Fritz Haber.

Today's inventor is less of a dark horse. Plenty of "most important inventions" lists include the invention of Penicillin as one of the most significant of the twentieth century, and in this case, they get it right.

Prior to Alexander Fleming's discovery of Penicillin, the first antibiotic, bacterial infections could and did rage through populations. From the bubonic plague to tuberculosis and leprosy, diseases that scourged populations became easily curable with the advent of readily-available antibiotics. There is no way to accurately estimate the number of lives saved by the invention of penicillin. Including all of the diseases treated by antibiotics, hundreds of millions of lives saved by the discovery of the first antibiotic is a reasonable guess.

It's hard to imagine a world where every year thousands of people die from preventable diseases, or die from simple burns or treatable STDs. But were it not for a chance accident with some contaminated laboratory glassware, that's what the world might have looked like.

In 1928, Scottish researcher Alexander Fleming left his lab bench for a two-week holiday. He accidentally left out a culture of staph bacteria, uncovered. A stray spore of a fungus called Penicillium notatum drifted onto the culture plate, setting in motion one of the most fortuitous and momentous discoveries in modern medical science.

When Fleming returned, he found the culture plate, still growing the staph bacteria, but now also home to a growth of Penicillium mold. And, in the circular halo around the mold, a clear section of culture, where no bacteria grew. Fleming realized the mold secreted a substance that killed bacteria, and the rest is history.

Antibiotics were heralded as 'miracle drugs' when they were introduced, and looking back, it's easy to see that the sentiment is far from hyperbole.

The ironic postscript to the antibiotic story is that the prevalence (and overuse) of antibiotics now has the potential to undo all of the improvements antibiotics have pioneered.

Situs Inversus

And what about non-Fig Newtonian?

This property also means that when compressive force is applied to it, it resists the force in that particular spot.

There are a ton of practical uses for this property, but to hell with those:

Inventors, Part 2: Feeding (and Blowing Up) the World

Welcome to day two of my three-day retrospective on the most important inventors of the twentieth century. If you haven't already, check out yesterday's post, where I expounded on one of the most important inventors of the twentieth century: Norman Borlaug. But Borlaug's Green Revolution would have been impossible were it not for a German inventor with a checkered legacy.

In the early parts of the twentieth century, you could argue that Chile was the most important place in the world. Chilean Saltpeter was one of the raw materials used in the creation of gunpowder and other explosives, and perhaps more importantly, as a fertilizer, it was essential for most developed nations' agriculture.

By the time the early 1900s rolled around, a war had already been fought over Saltpeter (also known as Sodium nitrate). So, during the early parts of World War I, the seas around Chile were hotly contested territory. Eventually, a British blockade of the country was successful, cutting off Germany's supply of Saltpeter.

Enter Fritz Haber, a German chemist. He was instrumental in developing the process by which atmospheric nitrogen could be fixed, creating Ammonia, which could be used both in the creation of artificial fertilizers and explosives.
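For reference, the overall reaction at the heart of the process is usually written as below. This is the standard textbook form (nitrogen plus hydrogen giving ammonia, reversibly), added here for illustration; the conditions and catalyst that make it practical are not shown.

```latex
\mathrm{N_2} + 3\,\mathrm{H_2} \rightleftharpoons 2\,\mathrm{NH_3}
```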

The Haber Process was eventually reverse-engineered by other countries after World War I, and it is now responsible for supporting one-third of the human population on Earth. Without the fertilizer produced by the Haber Process, agricultural yields would make the current human population wholly unsustainable: some have argued that the Haber process averted a worldwide Malthusian catastrophe. Haber was awarded the Nobel Prize in Chemistry for the process.

The Haber Process is still in use around the world. In fact, the industrial production of Ammonia consumes a significant portion of the global energy supply each year, at least 1% to 2% of all human-generated energy.

Haber's legacy, however, is dark: while the Haber Process has reshaped the face of human population, it also greatly prolonged World War I, by allowing Germany to produce explosives, and feed a much larger army. Haber was also a passionate proponent of chemical warfare, arguing strenuously for the use of poisonous gas in trench warfare in World War I, personally developing and overseeing the development and use of Chlorine and other poisonous gasses against soldiers.

Though he was decorated by Germany for his work during World War I, as a Jew, he was forced to flee Germany in 1933 to avoid Nazi persecution. Tragically, scientists at the chemical warfare laboratories he oversaw in the 1920s developed the formulation of Cyanide gas that would later be used in the Nazi extermination camps.

Check back tomorrow for Day 3 of my inventors retrospective: why not keeping some dishes clean might have saved the world.

Inventors, Part 1: Averting Global Starvation

When I was a youngster, I was fascinated by inventors. I was totally enamored of the romantic ideal of a lone enthusiast coming up with an idea no one had ever thought of before, and then changing the world with it. Perhaps it's not surprising that I idolized inventors: it's not like sports stars had any real appeal to the gangly kid with glasses.

Looking at typical lists of the most important inventions of the 20th century, most people can't help but think of computers, telephones, automobiles, airplanes or various household appliances.

But it seems like those kinds of lists are missing the point: even though most people never interact directly with them, there are a few inventions that have radically changed the world.

So, over the next three days, I'm going to provide my shortlist for the three most important inventors of the 20th century. Starting with an agronomist who pioneered the so-called "Green Revolution."

It's a small footnote in history today, but in the middle of the last century, it was widely predicted that the world was headed inexorably towards global famine and the deaths of millions of people. Bestsellers like 1968's The Population Bomb and 1967's Famine, 1975! spelled out dire predictions about the global population and food supply. Those predictions may very well have come true, if it weren't for the efforts of one American agronomist, Norman Borlaug.

Borlaug invented a strain of semi-dwarf, disease-resistant wheat. This invention allowed the global food supply to grow radically, and averted the potential global famine. Conservative estimates say that Borlaug's invention saved the lives of more than 240 million people. For his humanitarian efforts, he was awarded the Nobel Peace Prize, the Presidential Medal of Freedom, and the Congressional Gold Medal.

Recently, Borlaug's work has been criticized by Western environmentalists, who decry Borlaug's outspoken support for the use of biotechnology in agriculture and support for large-scale farming. His response to such criticisms:

"Some of the environmental lobbyists of the Western nations are the salt of the earth, but many of them are elitists. They’ve never experienced the physical sensation of hunger. They do their lobbying from comfortable office suites in Washington or Brussels. If they lived just one month amid the misery of the developing world, as I have for fifty years, they’d be crying out for tractors and fertilizer and irrigation canals and be outraged that fashionable elitists back home were trying to deny them these things."Watch for tomorrow's inventor, and find out how a single chemical reaction is now responsible for consuming more than 1% of the world's annual energy supply.

Escort Service

I'm not talking about Sophia from 1992's Indiana Jones and the Fate of Atlantis, a computer-controlled character who would simply follow you from room to room and wait while you did whatever it was you needed to do. You would play AS Sophia from time to time, generally to help Indy out of a jam, but the rest of the time she was there as a spirit guide.

This was innovative at the time (as was most stuff from early LucasArts). There was a time when the idea of a player character period was innovative, following from games like Defender, Pong, Lunar Lander, Asteroids, etc. But here we had ANOTHER character without a 2P controlling her.

But here's the thing. Sophia was dumb. She had strict hard-coded responses to questions asked of her, and when controlling her, was only ever meant to do exactly what LucasArts intended YOU to do with her. That might have taken a while, but since she could only respond to the objects you had her interact with, her responses either advanced you further along the path, or indicated that you were barking up the wrong tree. I'm not saying that Sophia, the character, was dumb, or that Sophia the concept was dumb. That would be wrong on both counts. But Sophia as a piece of computer software, as an actor able to function independently in the game was literally dumb, unable to conceive of and act on information that the player didn't provide.

And that was PERFECTLY fine. LucasArts knew that she didn't need to have intelligence, that they weren't going to be able to give her intelligence, and if they had, it wouldn't have improved the game much. So they went with a gameplay mechanic that made sense in the context of the game, and Sophia is one of the most beloved characters in Gaming. (Beloved in the sense that she's actually charming and interesting, not that she can carry 4,000 Big Guns and jump and kill aliens or whatever.)

Compared to this, Alyx, from 2004's Half-Life 2 is an amazing piece of software wrapped up in the guise of a character. She does interact with you and the world, commenting on your abilities, and helping you shoot... well whatever you're shooting at the time. Going from independently-acting sidekick to scripted drone is seamless, and really provides the gamer with the sense that she's a fully fleshed-out and deep character, 1000 times more interesting and charming than that game's Player, Gordon Freeman (mostly because she actually, you know, talks). Well, of course a game made a decade later is going to be more sophisticated. Alyx is by far the best computer-controlled character in any game I've ever played.

So what's this all about?

Even Valve, the creators of that 5-year-old masterpiece (5 years?? Already?), knew that their artificial intelligence wasn't good enough to completely convince you, and gave Alyx a conservative role in the game, staying behind you for the most part, and hiding, rather than trying to do a full-out assault on whatever the objective was. Even though the computer was ALSO controlling enemies, even a sophisticated character like Alyx isn't able to conceive of strategies and thinking that are humanlike enough to be effective in that situation.

Other developers on the other hand, fresh from their Intro to AI course at the local community college, don't share that same sense of modesty. They have a hubris which tends to ride into the disappointing, giving a share of the game's actual gameplay to their digital creations, rather than leaving it to the CPU sitting between the keyboard and chair. I hate games like this with a passion. But at least those characters are supposed to be helping you.

Enter the escort quest. Someone somewhere decided it'd be a super idea if you, the player, had to at some point "rescue" someone, or bring them from point A to point B, guiding them through whatever deathtraps spanned the two. Valve itself did this in the original Half-Life a couple times, though it never made the game's progression dependent on it. Games like Metal Gear Solid had times where the player would have to navigate whatever tricky situation he was in, while at the same time, making it safe for the important computer controlled robot that he was escorting.

It was at those points where scientists, high-ranking military officers, heads of state, other combat veterans, whatever, turned into the largest sacks of idiocy, showing no survival instinct at all, instead relying on the player to dive between the bullets or electrified floors that they would expertly avoid avoiding any instant your eyes were off them for a second.

No one is going to accuse Konami of being a poor developer of video games, but I would suggest a certain level of hubris in assuming that their Artificial Intelligence was good enough to give the player a rewarding experience in feeling like they were really working with someone to get through a tough situation. Instead you have people who your entire goal for 5 minutes is to keep safe, running around in circles in the path of enemy fire, falling into lava pits, and basically acting like a suicidal seizure victim. That'd be fine with me, if the game didn't require me to keep said victim alive to open a door, or get back to Washington, etc. etc. The worst is getting the person nearly there, having their life bar finally vanish because your camera was pointed at, you know, the goal, only to have them take the opportunity to run head first into the nearest bullet. A fact you are only aware of because the game instantly stops, and tells you Game Over, as though time itself hinged on the survival of this loon with no instinct for self-preservation.

This is bad enough when it's for one or two missions in a game, but for games where it is a large chunk of the action, like Resident Evil 4, it totally saps any desire to keep playing for me. I feel like if Ashley isn't smart enough to keep her head down when confronted with hordes of brain aficionados, then it's probably for the better that the President never see his daughter again. She's just baggage when the world rests on the shoulders of YOU. Taking care of one lobotomy patient while you're supposed to be saving the planet from the end times seems counterproductive, contrived and just plain irritating.

This makes the game not about defending the character from whatever trials await you and them, but about defending the character from themselves, which is frustrating and stupid.

So what does Capcom do? Release Dead Rising, of course! A game which is basically a string of escort quests where the only consolation to your charge's suicidal tendency is that the opponents are just as stupid, often sitting there and ignoring you completely while you hit their next-door-neighbor with a shopping cart. Let me be clear: the concept of Dead Rising - swarms of slow-moving, slow-witted enemies and myriad ways to dispose of them - is not good enough to overcome the escort-questyness of the execution (har har).

Until game AI is good enough to simulate human thought, or at least approach it, these missions should be eliminated from all games. This will take nearly forever, which is ok. I'm fine with never seeing another one again. The fact is that AI still isn't good enough - even from top-tier developers in 2009 - to be convincing, and nothing highlights that more than the escort quest. So do yourself a favor, and stop including it in games, and we'll stop noticing.

If you need filler, consider turning your main character into a slow-moving, boring werewolf for 2 out of every 2.5 hours instead. I for one would prefer that epic failure.

[thanks to GameDaily for the image]

Short Film Theatre: Das Clown

Take a seemingly-sweet tale about an old man and a beloved clown doll that comes to life: mix in the elementary school film-strip style (complete with narrator), and apply a liberal dose of over-the-top weirdness and murder, and what do you have? Das Clown, that's what!

The short film was the creation of director Tom E. Brown, whose film production company, Bugsby Pictures, has a website almost totally lacking in content, but displaying the most awesome motto ever: Making movies like your mom used to make.

(Bonus random fact: Blues Traveler frontman and harmonica virtuoso John Popper provided the narration for the eight-minute film.)

Though I've never seen It. Maybe if I did, I could convert the hatred to fear. (And then to anger, and then to the Dark Side. I've always wanted Lightning Powers.)

The Perils of Pets

You know those giant-sized goldfish that are so popular in landscaped ponds? Koi, they're called: what the hell are they?

Oh. They're carp? Carp? Man, the human race will domesticate anything. They really shouldn't.

For example, Skunks? Skunks?! Has evolution not given you abundant reason to stay the hell away from skunks?

While we're at it, can we just lump lions, tigers, wolves, dingoes and monkeys together into the category of "things you shouldn't have in your house because they'll probably kill you"? Apparently not. (I'm not kidding about monkeys. Enjoy your Herpes B: progressively ascending paralysis, confusion, coma, multiple organ failure and a 70% death rate. But it's sooo cute.)

Or, 'Fancy Rats.' Fancy rats? Give me a break, it doesn't matter how fancy your rat is, it's still a damn Norway Sewer Rat. Seriously, the brown rat is the most successful mammal on the planet: it has established itself on every continent except Antarctica (and I fully expect to see Antarctic snow rats before long). Does this species really need our patronage?

Now the Gambian Pouch Rat, there's an example of a rat put to good use. They find landmines, for heaven's sake, who could argue with that? And they detect Tuberculosis as well as a human lab technician. They're like the seeing-eye dog of vermin. That's an animal that you should keep as a pet. What? Wait, Monkeypox? Okay, never mind.

Also, some people keep anteaters as pets. But, honestly, I'm pretty sure they're only useful for hilarious anteater-caption images (warning: coarse language).

Pretty Noose

A holdover from the 17th century, neckties are direct descendants of the ascot, itself a descendant of the cravat, which itself is the descendant of the traditional decoration of Croatian mercenaries during the Thirty Years' War.

But who cares? They're stupid.

Men who wear them now do so only as a matter of tradition; a way to dress for work or occasions that supposedly require that tradition to be effective. People think that men in ties look dashing, or classy, but I always think that people wearing them look like fops - one handkerchief dangling from a cuff away from having a fainting spell when seeing a spider.

And why shouldn't I? That image has been carefully cultivated when looked at from a historical perspective. Nowadays people claim that a necktie is a great opportunity to show your individuality. In case you don't see the irony here, let me be clear: it's about 1/100th as great an opportunity as being able to dress yourself as you see fit. No, instead we basically force men to wear the same, ill-fitting, uncomfortable clothes as everyone else and tell them they have 10 square inches to express themselves (from the available pool of acceptable ties) in an effort to differentiate them from the rest of the IBM Engineers of the 1950s.

Some men like this, and to be honest, I can see their point. When you have no personality to speak of, then not having to worry about dressing yourself in a way that projects that personality is great. We have 400 years of tradition to fall back on, so if you wear black pants, white oxford and a tie without Looney Tunes on it, you're going to look "ok".

I'm not claiming I'm a bastion of individuality. I hate having to pretend to care about how I'm dressed, and if not having to think about it works for them, great. My beef is with the level of comfort and the type of activity being done in these ridiculous things.

First of all, ties need to be tied, an annoying procedure that can be accomplished in a great many styles, such as the Windsor, the Half-Windsor, the Buckingham, the Elvis, the Gandhi and the Twist. My particular preference is to have someone else do it for me, and then simply make the hole large enough so I can slip my head in and out of it when I need to. Tying ties takes practice, and I for one don't think it's worth it to be good at it.

Second, they're uncomfortable. It's literally tied around your neck. Who the hell thought this was a good idea? My dog's collars are looser than this. For one, it forces you to do up the top button on your shirt, itself uncomfortable for people having to, say, swallow or breathe. Have you noticed that when men get off work they loosen their tie and undo the top button? Why do you think that is?

Finally, it's not appropriate for every job. If your job involves, say, doing maintenance on tractors, working in an assembly line, digging ditches or painting, then you shouldn't be made to wear a tie. I would suggest that my job is a poor candidate for tie-wearing, but no matter. Wear the tie we must, because we must wear the tie.

You might laugh, but it's a real phenomenon. Never mind the gruesome image of the industrial accident, ties themselves are a disease-transmission vector, since they're a) right in front of and below someone's face and b) almost never washed. In September 2007, hospitals in England banned the wearing of ties for this very reason. This comes in addition to the fact that the first thing paramedics do when confronted with an unconscious tie-wearer is to, yep, cut off the tie. But it's worth it, we look so cool!

One possible solution to this is to wear what is known as a clip-on tie, that is, one that requires no tying, no strangulation and, when yanked, pops right off. You'll be ridiculed for dressing like a 6-year-old, but that's not a bad solution overall. It's not as good as wearing no tie at all, though, is it? It's like wearing a tie clip, instead of just taking the damn thing off.

Can we get rid of them, please? Workers are more productive when they're happy and comfortable. Let them wear t-shirts and jeans if they want and you'll make more money. If they prefer suits and ties, or feel they have to wear them to sell cars or whatever, then that's fine, just don't make it a mandate in jobs where it's not appropriate. Look no further than the famous historical culture shift between IBM and Microsoft, and look where they are now. Even Iran has banned them, on the grounds that they're a symbol of Western Oppression. Let me repeat that. A symbol of Western Oppression.

The wearing of neckties is on the decline, thankfully, but not in my immediate vicinity. Casual Fridays and places of business where they're not stuck in a time warp have led to the declining membership of tie manufacturers and designers in the Men's Dress Furnishings Association. I say good riddance.

Tomorrow: Why "dress shirts" should be used as cannon wadding.

So, as a matter of protest, I suggest the ultimate in minimal-effort disdainful neckwear: the Bolo Tie. If anybody gives you guff, just tell them if it's good enough to be the official neckwear of the state of Arizona, it's good enough for me.

Arr, You Vitamin-C-Deficient Scallywag!

Growing up, my mother always exhorted us to eat our fruits and vegetables, or else we'd get Scurvy. Being an elementary-schooler, I didn't know that Scurvy was, in fact, a dietary deficiency in Vitamin C. Actually, the sum total of my understanding of the word was as part of the timeless (cartoon) pirate lingo: "You scurvy scallywag!" I think at one point I was convinced that not eating your vegetables would result in me becoming a pirate.

The disease was, apparently, quite common among sailors and pirates in the seventeenth and eighteenth centuries, but the symptoms are quite a bit less pleasant than a charming West Country accent: spotted skin, spongy, bleeding gums, eventually leading to wholesale tooth loss, open pus-filled sores, immobility and death. Being a simple dietary deficiency, the disease could be cured by daily consumption of fruits or vegetables, especially citrus fruits (which were, unfortunately, unavailable on long sea voyages).

One of the most common solutions to shipboard scurvy came as part of the solution to another unpleasant facet of life on board a ship: filthy, stagnant drinking water. As seawater was undrinkable, sailors and pirates needed to haul all of their fresh water with them, in wooden casks. Unfortunately, this stagnant water quickly grew algae and became filthy and unpalatable. Grog, a mixture of the stagnant water and rum, made the water more palatable (and avoided the predictable consequences of handing out straight rum to bored sailors and pirates). Eventually, the British Navy also added citrus juice (usually lime) to the mix, and unexpectedly, the problems with scurvy almost disappeared. This also led to the infamous derogatory epithet aimed at British sailors: "limey!"

Vitamin C deficiency is almost unknown in the animal kingdom: virtually all mammals can synthesize Vitamin C, and don't need to find it in the environment. Along with other primates, guinea pigs are virtually the only other mammal that does not synthesize their own L-ascorbic acid. Well, fruit bats, too. But I don't consider bats to be mammals. Instead I tend to think of them as horrible unearthly hellspawn that have no business existing at all. (So not an exact taxonomy as such.)

Serious cases of scurvy are all but unknown in the modern world, but apparently, a peculiar diet can lead to inadvertent self-imposed scurvy. So eat your fruits and vegetables. Yarr!

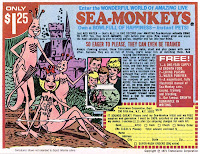

Sea-Monkeys: a Bowlful of Happiness

Ah, the joyous miracle of life. I'm referring, of course, to the miracle of Sea Monkey life. For millions of children, the first experience with playing god began with an advertisement in the back of a comic book, and ended with a slurry of dead Sea Monkeys dried into a hard paste on the front of a dresser. Or was that just me?

The original mail-order pets, Sea Monkeys are a fascinating biological anomaly: a variant species of brine shrimp that evolved in salt lakes and areas that saw alternating periods of moisture and evaporation. Their unusual heritage meant that they could be easily induced to enter cryptobiosis, a state of suspended animation that allowed the shrimp to be dried and packaged along with a packet of food and salt, which when combined with water, would re-animate the dormant creatures.

(Another creature capable of cryptobiosis, the Tardigrade, or 'Water Bear,' a microscopic eight-legged organism, is perhaps the hardiest organism on earth, a polyextremophile that has been shown to survive temperatures close to absolute zero, minutes in boiling water, decades without water, exposure to 1000 times the radiation that would kill a human, and even the vacuum of outer space.)

Sea Monkeys were notable for their outlandish advertisements which illustrated anthropomorphic Sea Monkey creatures which bore no resemblance to actual brine shrimp. Nowadays, the Sea Monkey franchise isn't just limited to aquariums: runaway merchandising means you can now get Sea Monkey habitats in wristwatch form, or even on a necklace.

Behind all the hype and biological wizardry was Harold von Braunhut, one of the pioneers of the garish advertisements that filled the back pages of early comic books. He was also the inventor of the X-Ray Specs novelty, and many other novelties like 'crazy crabs,' and 'invisible goldfish.' Unfortunately, von Braunhut was also a white supremacist and ardent neo-Nazi supporter.

Harlan Ellison's Voice: Way Over the Edge

Harlan Ellison likes to hear himself talk. Now that's hardly an earthshattering conclusion: Ellison's abrasive, vociferous and frequently litigious nature has been fairly well-documented online. But it was only recently that I actually heard Ellison's smug, self-satisfied attention to his own work.

The Voice From the Edge: Volume 1 is the first of two audiobook collections of Harlan Ellison's short stories, read by the author. Now, I've always liked Ellison's stories, and his personality was never a terribly important factor: who cares if the author is a jerk if the stories are good?

Here's the relevant quote from the back of the box: "...only aficionados of Ellison's singular work have been aware of another of his passions ... he is a great oral interpreter of his stories."

Well, I certainly wouldn't call his interpretation great, but there's no arguing that Ellison is a passionate interpreter of his own work. So passionate, in fact, that virtually every word is screamed, shouted or howled. His delivery is most akin to a continuously rising crescendo of shrieking: a pompous, overblown, overacted cacophony of undirected passion.

The bombastic, over-the-top delivery is a bizarre contrast to Ellison's actual storytelling. I always imagined Ellison's authorial voice as dry, understated: sardonic, certainly, but letting the fantastic nature of the story speak for itself. Read out loud by Ellison, however, every description and every event is given the subtlety of a sledgehammer blow to the forehead. By the end of any given story in this collection, the madcap delivery has reduced the essence of the tale to clownish buffoonery.

I'm generally a fan of audio books: they don't work well for every genre or author, but when done well, and interpreted well, they can be a fantastic experience. This collection is not a fantastic experience. It is an embarrassing performance, much like an actor, so praised for his performance in one venue, who decides to release an album (I'm looking at you, Nimoy).

Ellison may be a fantastic author, but he's a poor narrator. I guess the best you could say is that Harlan Ellison likes to hear himself talk.

I'm not sure what it is that makes some people oblivious to their various obvious deficiencies, but they're in full force on LibriVox. Some are excellent - you could almost imagine them as professional readers - whereas some are just awful, and you wonder why they've been the ones to read 50% of the books on the site.

Three eggs. ANY STYLE.

Once christened the International House of Pancakes, a change of branding and marketing in the early part of this decade revitalized the struggling restaurant chain and brought with it one of the most surprising turnarounds the restaurant industry has ever seen.

Gone are the various flags etched into the partitions between booths, the stoic serif font of the logotype. Replaced with a more cozy and modern interior look and a bulbous sans-serif font: IHOP. One could almost forget what it originally stood for, though I won't.

(Here's the clip that inspired this post. Warning: Satire)

The famous blue roof is an icon; a lighthouse to the road-weary traveller looking for a place to eat where there's a carpet, soft lighting and a predictable atmosphere.

And then there is Denny's. A rancid red-on-yellow sign points the way, almost as if to say, "Look. You're not going to do better." The food at Denny's is mediocre, there's no doubt of that. It's something intangible though, a feeling I get more than any objective "score". The food is simply substance, necessary to go on living (though not a particularly pleasant life for a few hours afterwards), whereas at IHOP, the food is a meal to be shared and experienced.

When we were in high school, the localest Denny's for a time hosted most of our little get-togethers, being one of the few 24-hour places around. Which gave rise to our own personal slogan of "You don't go to Denny's. You end up there."

And it's true. I've never left my house in an earnest attempt to find a Denny's and eat there. It's simply a product of elimination. What's open near where we are?

And trust me. NEVER order the Moons Over My Hammy.

Clearly Obsessed

So, without further ado, I present the completely transparent Mac SE:

So pretty.

With the right fabrication skills, you could have a carbon fiber Mac. Now THAT'd be badass.